
How to Perform a Performance Test on AutoMQ

AutoMQ's architecture is built on S3 shared storage. It is 100% compatible with Apache Kafka and offers rapid elasticity, low cost, and high performance. Compared with Kafka, AutoMQ provides better throughput during cold reads and higher peak throughput. Many customers want to know how AutoMQ actually performs, so this tutorial walks through running a performance test against AutoMQ on AWS.

- AutoMQ has been correctly deployed on AWS following the official deployment documentation.

The AutoMQ Console is used to manage specific data plane clusters. Following the process in the official deployment documentation, deploying the AutoMQ Console can be completed with a single click through AWS Marketplace.

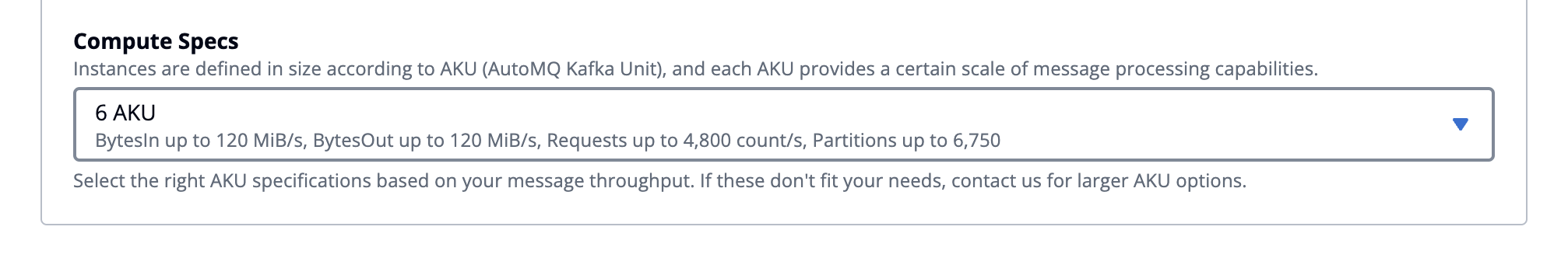

In the AutoMQ console, follow the official documentation to create a cluster with a specification of 6 AKU. 6 AKU indicates a recommended throughput of 120 MiB/s for writes and reads (with a 1:1 read-write ratio), using 3 r6i.large instances at the underlying layer.

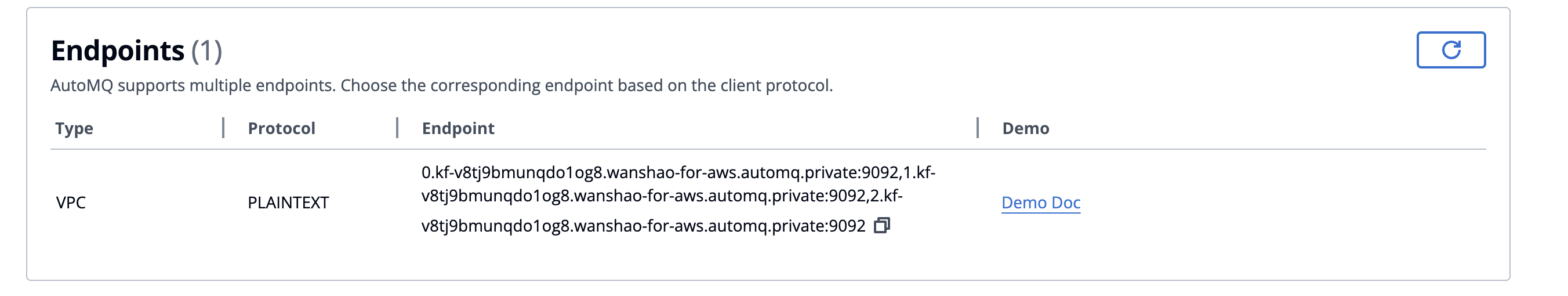

After the cluster is created, we can obtain the access point address from the cluster instance details page:

We define AKU (AutoMQ Kafka Unit) to describe the maximum load capacity an AutoMQ cluster can handle. The limitations of AKU can be divided into two categories:

- Fixed Limitations: No exceptions can be made under any circumstances. These include:
  - Partitions: A single AKU supports at most 1,125 partitions.
- Recommended Limitations: May vary depending on cluster load in different scenarios. These include:
  - Read/Write Throughput: The recommended read/write throughput that a single AKU can handle varies based on the production-to-consumption ratio.
    - For a 1:1 production-to-consumption ratio: One AKU is recommended to handle 20 MiB/s of write throughput and 20 MiB/s of read throughput.
    - For a 1:3 production-to-consumption ratio: One AKU is recommended to handle 12.5 MiB/s of write throughput and 37.5 MiB/s of read throughput. In high fan-out scenarios, AutoMQ therefore supports higher total traffic, because writes cost more than reads in AutoMQ.
  - Request Frequency: It is recommended to limit the number of requests per second to 800.

As mentioned earlier, in some scenarios, the recommended limit can be exceeded, but this is typically at the cost of other metrics. For example, when the read and write traffic in the cluster is below the AKU limit, the request frequency can exceed the AKU limit.

Additionally, in some extreme cases, it may not be possible to achieve a certain metric. For instance, when the number of partitions in the cluster reaches the limit, and all read traffic is "cold reads" (i.e., consuming older data), it may not be possible to reach the AKU recommended read throughput limit.
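The per-AKU figures above can be scaled to a cluster size with simple multiplication. The following is a minimal sketch, not an official sizing tool: the function and constant names are hypothetical, only the two ratios quoted above are supported, and the 800 requests/s figure is treated as a per-AKU value, as the surrounding list suggests.

```python
# Per-AKU limits quoted in the text above.
PARTITIONS_PER_AKU = 1125
REQUESTS_PER_SECOND_PER_AKU = 800  # assumption: the 800 req/s figure is per AKU

# Recommended (write, read) MiB/s per AKU, keyed by fan-out (reads per write).
THROUGHPUT_PER_AKU = {1: (20.0, 20.0), 3: (12.5, 37.5)}


def aku_limits(aku, fan_out=1):
    """Scale the per-AKU limits to a cluster of `aku` AKUs."""
    write, read = THROUGHPUT_PER_AKU[fan_out]
    return {
        "max_partitions": aku * PARTITIONS_PER_AKU,
        "write_mib_per_s": aku * write,
        "read_mib_per_s": aku * read,
        "requests_per_s": aku * REQUESTS_PER_SECOND_PER_AKU,
    }


# The 6 AKU cluster created earlier, at 1:1 and 1:3 ratios.
print(aku_limits(6))
print(aku_limits(6, fan_out=3))
```

For the 6 AKU cluster used in this tutorial, the 1:1 case gives 120 MiB/s of writes and reads, which matches the specification described when the cluster was created.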

Prepare a machine within the same VPC as the AutoMQ data plane to generate load. In this instance, we use an m6i.large machine, which offers over 100 MiB/s of network bandwidth by default, to generate sufficient write pressure.

Download the Community Edition code from the Releases page of the AutoMQ GitHub repository, which includes the automq-perf-test.sh tool. This tool is implemented with reference to the core logic of the OpenMessaging Benchmark framework. It has the following advantages:

- Compared to Apache Kafka's built-in kafka-producer-perf-test.sh and kafka-consumer-perf-test.sh scripts, automq-perf-test.sh supports launching multiple Producers and Consumers within a single process and sending and receiving Messages to/from multiple Topics, making it more suitable for real-world scenarios and more convenient to use.
- Compared to the OpenMessaging Benchmark testing framework, it no longer requires distributed deployment of multiple Workers, and tests can be executed with a single machine with one click. For less extreme test scenarios, it is easier to deploy and use.
- Additionally, the automq-perf-test.sh script also supports more complex cold read test scenarios: it can launch multiple Consumer Groups, with each Group consuming from different offsets, thus avoiding cache reuse during cold reads. This enables testing cold read performance under more extreme conditions.
- Because this test script only relies on the Apache Kafka Client, it can support performance testing for stream systems compatible with the Kafka protocol such as Apache Kafka and MSK.

In addition, to ensure the proper functioning of the load generation tool, a Java environment with JDK version 17 or higher is required.

Before introducing specific stress testing scenarios, we will first briefly explain how to use the automq-perf-test.sh script.

- `--bootstrap-server`: Specifies the initial connection nodes for the Kafka cluster, formatted as "host1:port1,host2:port2". These addresses are used only for the initial connection to fetch the cluster metadata, so you don't need to list every broker in the cluster. A few running, reachable addresses will suffice.
- `--common-configs`: Specifies common configurations for the Kafka Admin Client, Producer, and Consumer, such as authentication-related configurations.
- `--topic-configs`: Specifies configurations related to Topics, such as message retention time.
- `--producer-configs`: Specifies configurations for the Producer, such as batch size, batch time, and compression method.
- `--consumer-configs`: Specifies configurations for the Consumer, such as the maximum size of a message pull operation.
- `--reset`: Whether to delete all existing topics in the cluster before running the benchmark.
- `--topic-prefix`: The prefix for topics used in testing.
- `--topics`: The number of topics created during testing.
- `--partitions-per-topic`: The number of partitions in each topic. The total number of partitions used in the test equals `--topics` * `--partitions-per-topic`.
- `--producers-per-topic`: The number of Producers created per Topic. The total number of Producers used for testing equals `--topics` * `--producers-per-topic`.
- `--groups-per-topic`: The number of Consumer Groups per Topic, indicating the read-write ratio (fan-out) during testing.
- `--consumers-per-group`: The number of Consumers in each Consumer Group. The total number of Consumers used for testing equals `--topics` * `--groups-per-topic` * `--consumers-per-group`.
- `--record-size`: The size of each message sent by the Producer, measured in bytes.
- `--send-rate`: The total number of messages sent per second by all Producers. The write throughput during testing equals `--record-size` * `--send-rate`.
- `--random-ratio`: The ratio of random data in the message, commonly used for testing scenarios where the Producer enables compression. The value ranges from 0.0 to 1.0. The larger the value, the more random data in the message and, in theory, the worse the compression efficiency. The default is 0.0, meaning every message is identical.
- `--random-pool-size`: The size of the random message pool, from which a message is randomly selected each time a message is sent. This option only takes effect when `--random-ratio` is greater than 0.
- `--backlog-duration`: Used in catch-up read test scenarios to control the duration of message backlog, in seconds. `--record-size` * `--send-rate` * `--backlog-duration` is the size of the message backlog before the catch-up read.
- `--group-start-delay`: Used in catch-up read test scenarios to control the interval between the consumption starting points of successive Consumer Groups, in seconds. Setting this option staggers each Consumer Group's consumption progress to avoid cache reuse, thereby better simulating real catch-up read scenarios.
- `--send-rate-during-catchup`: Used in catch-up read test scenarios to control the Producer's send rate during the catch-up read period. Defaults to `--send-rate`.
- `--warmup-duration`: The duration of the warmup phase before testing begins, specified in minutes. During the warmup period, the Producer's send rate gradually increases to `--send-rate` over the first 50% of the time and is then held at `--send-rate` for the remaining 50%. Metrics collected during the warmup period are not included in the final results. To ensure the JVM is adequately warmed up, it is recommended to set `--warmup-duration` to 10 minutes or more.
- `--test-duration`: The duration of the formal test, specified in minutes. This only applies when no catch-up read test is run (that is, when `--backlog-duration` is less than or equal to 0).
- `--reporting-interval`: The interval for collecting and reporting metrics during the test, specified in seconds.
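Several of the options above are related by simple products. The sketch below collects those formulas in one place so a planned workload can be checked before it is launched. It is an illustration only: `describe_workload` is a hypothetical helper, not part of automq-perf-test.sh, and the read throughput assumes every Consumer Group consumes all written data.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def describe_workload(topics, partitions_per_topic, producers_per_topic,
                      groups_per_topic, consumers_per_group,
                      record_size, send_rate, backlog_duration=0):
    """Derive workload totals from the option values, using the formulas in the list above."""
    write_bytes_per_s = record_size * send_rate
    return {
        "partitions": topics * partitions_per_topic,
        "producers": topics * producers_per_topic,
        "consumers": topics * groups_per_topic * consumers_per_group,
        "write_mib_per_s": write_bytes_per_s / MIB,
        # Assumption: each Consumer Group reads everything that is written.
        "read_mib_per_s": write_bytes_per_s * groups_per_topic / MIB,
        "backlog_gib": write_bytes_per_s * backlog_duration / GIB,
    }


# Example: 10 topics x 128 partitions, 800 messages/s of 64 KiB, 3 groups, 600 s backlog.
print(describe_workload(10, 128, 1, 3, 1, 65536, 800, backlog_duration=600))
```

Comparing the derived totals against the AKU limits of the target cluster is a quick way to spot a workload that exceeds the recommended figures.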

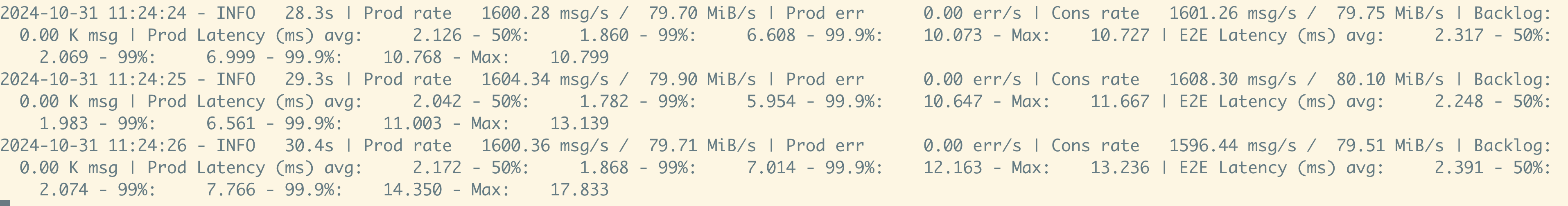

During the test run, the following outputs will be printed periodically, indicating the metrics for the most recent --reporting-interval period:

2024-11-06 16:17:03 - INFO 230.0s | Prod rate 803.83 msg/s / 50.24 MiB/s | Prod err 0.00 err/s | Cons rate 2411.49 msg/s / 150.72 MiB/s | Backlog: 0.00 K msg | Prod Latency (ms) avg: 1.519 - 50%: 1.217 - 99%: 4.947 - 99.9%: 11.263 - Max: 11.263 | E2E Latency (ms) avg: 1.979 - 50%: 1.595 - 99%: 6.264 - 99.9%: 9.451 - Max: 9.735

The meanings of each field are as follows:

- "Prod rate": The number and size of messages sent per second.
- "Prod err": The frequency of errors occurring during message sending.
- "Cons rate": The number and size of messages consumed per second.
- "Backlog": The number of messages by which the Consumer lags behind the Producer.
- "Prod Latency": The delay experienced by the Producer in sending messages, with "avg," "50%," "99%," "99.9%," and "Max" representing the average latency, P50 percentile, P99 percentile, P999 percentile, and the maximum latency, respectively.
- "E2E Latency": The delay from when each message is sent to when it is consumed by the Consumer.

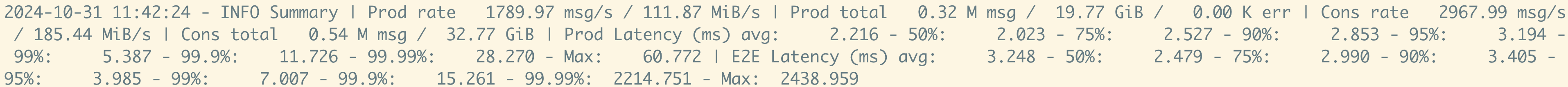

At the end of the test run, the following output will be printed, summarizing the relevant metrics during the test period:

2024-11-06 16:18:13 - INFO Summary | Prod rate 800.78 msg/s / 50.05 MiB/s | Prod total 0.24 M msg / 14.68 GiB / 0.00 K err | Cons rate 2402.35 msg/s / 150.15 MiB/s | Cons total 0.72 M msg / 44.03 GiB | Prod Latency (ms) avg: 1.614 - 50%: 1.225 - 75%: 1.601 - 90%: 2.451 - 95%: 3.705 - 99%: 7.150 - 99.9%: 16.706 - 99.99%: 40.361 - Max: 136.785 | E2E Latency (ms) avg: 2.126 - 50%: 1.574 - 75%: 2.013 - 90%: 3.230 - 95%: 4.718 - 99%: 10.634 - 99.9%: 30.488 - 99.99%: 46.863 - Max: 142.817

The meaning of each field is consistent with the previous text.
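When comparing several runs, it can help to pull the numbers out of these report lines programmatically. The following is a minimal sketch that assumes the line layout shown in the sample outputs above; the function name and the dictionary keys are hypothetical, and the tool's JSON report is likely the better source when it is available.

```python
import re

RATE_RE = re.compile(r"(Prod|Cons) rate ([\d.]+) msg/s / ([\d.]+) MiB/s")
LATENCY_RE = re.compile(r"(Prod|E2E) Latency \(ms\) (.*)")
PAIR_RE = re.compile(r"(avg|Max|[\d.]+%): ([\d.]+)")


def parse_report_line(line):
    """Extract rates and latency percentiles from one periodic or summary line."""
    result = {}
    for segment in line.split(" | "):
        rate = RATE_RE.search(segment)
        if rate:
            kind = rate.group(1).lower()
            result[kind + "_msg_per_s"] = float(rate.group(2))
            result[kind + "_mib_per_s"] = float(rate.group(3))
            continue
        latency = LATENCY_RE.search(segment)
        if latency:
            kind = latency.group(1).lower()
            pairs = PAIR_RE.findall(latency.group(2))
            result[kind + "_latency_ms"] = {name: float(value) for name, value in pairs}
    return result


sample = (
    "2024-11-06 16:17:03 - INFO 230.0s | Prod rate 803.83 msg/s / 50.24 MiB/s | "
    "Prod err 0.00 err/s | Cons rate 2411.49 msg/s / 150.72 MiB/s | Backlog: 0.00 K msg | "
    "Prod Latency (ms) avg: 1.519 - 50%: 1.217 - 99%: 4.947 - 99.9%: 11.263 - Max: 11.263 | "
    "E2E Latency (ms) avg: 1.979 - 50%: 1.595 - 99%: 6.264 - 99.9%: 9.451 - Max: 9.735"
)
print(parse_report_line(sample))
```

Segments the sketch does not recognize, such as "Prod err", "Backlog", and the totals in the summary line, are simply skipped.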

Tail Read, also known as "hot read," tests scenarios where the position gap between the Producer and Consumer is minimal. In this scenario, the messages sent by the Producer are consumed by the Consumer immediately after being written to the Broker. The messages consumed by the Consumer then come directly from the Log Cache, without the need to read from object storage, resulting in lower resource consumption.

The following use case tests the Tail Read performance of AutoMQ:

- The production and consumption traffic ratio is 1:1.
- Data will be written into 1280 partitions across 10 Topics.
- 1600 messages of 51 KiB each will be written per second (without any batching), for a write speed of approximately 80 MiB/s.

Before running the following script, replace the --bootstrap-server address with your actual AutoMQ endpoint address.

    KAFKA_HEAP_OPTS="-Xmx12g -Xms12g" ./bin/automq-perf-test.sh \
      --bootstrap-server 0.kf-v8tj9bmunqdo1og8.wanshao-for-aws.automq.private:9092,1.kf-v8tj9bmunqdo1og8.wanshao-for-aws.automq.private:9092,2.kf-v8tj9bmunqdo1og8.wanshao-for-aws.automq.private:9092 \
      --producer-configs batch.size=0 \
      --consumer-configs fetch.max.wait.ms=1000 \
      --topics 10 \
      --partitions-per-topic 128 \
      --producers-per-topic 1 \
      --groups-per-topic 1 \
      --consumers-per-group 1 \
      --record-size 52224 \
      --send-rate 1600 \
      --warmup-duration 10 \
      --test-duration 5 \
      --reset

Tips: Generally, AutoMQ recommends that a single partition support 4 MiB/s of write throughput. You can determine the number of partitions needed for a Topic based on this value. With the recommended configuration, AutoMQ ensures efficient cold read performance.
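The tip above translates into a one-line calculation. As a rough sketch, using a hypothetical helper name and treating the 4 MiB/s figure as the only constraint on partition count:

```python
import math

RECOMMENDED_MIB_PER_PARTITION = 4  # per-partition write throughput from the tip above


def min_partitions(topic_write_mib_per_s):
    """Smallest partition count that keeps each partition at or below 4 MiB/s."""
    return max(1, math.ceil(topic_write_mib_per_s / RECOMMENDED_MIB_PER_PARTITION))


# A topic receiving 8 MiB/s of writes needs at least 2 partitions by this rule.
print(min_partitions(8))
```

In practice, consumer parallelism and key distribution may call for more partitions than this lower bound.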

Execution Results

Upon completion, a time-stamped report JSON file, such as perf-2024-10-31-11-24-57.json, will be generated in the current directory. The output will be consistent with the OpenMessaging Benchmark results.

In our testing, AutoMQ clusters maintained single-digit millisecond P99 write latency at a write throughput of 80 MiB/s, with no message backlog.

Catch-Up Read, also known as "cold read," tests scenarios where the Consumer's consumption offset significantly lags behind the Producer's offset. In this scenario, the Consumer is first paused, allowing messages to accumulate to a certain size before resuming consumption. At this point, the Consumer reads messages from object storage, with the Block Cache pre-reading and caching the data.

In this test scenario, focus on the following key metrics:

- Catch-up read speed. Observe whether the consumption speed of each Consumer Group exceeds the Producer's write speed. Only if it exceeds the write speed can the Consumer catch up with the Producer.
- Impact of catch-up read on write throughput. Observe whether the Producer's message sending throughput decreases and whether the sending latency increases during the catch-up read process.
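The first metric can be turned into a rough time estimate: a Consumer Group drains its backlog at the difference between its consumption rate and the ongoing write rate. The sketch below illustrates this with a hypothetical helper, and the 150 MiB/s consumption rate in the example is an illustrative number, not a measured result.

```python
def catch_up_seconds(backlog_mib, consume_mib_per_s, produce_mib_per_s):
    """Estimated time for one Consumer Group to clear its backlog.

    Returns None when consumption is not faster than production,
    because the group can then never catch up.
    """
    drain_rate = consume_mib_per_s - produce_mib_per_s
    if drain_rate <= 0:
        return None
    return backlog_mib / drain_rate


# 600 s of backlog at 50 MiB/s is 30000 MiB; the consumption rate is hypothetical.
print(catch_up_seconds(30000, consume_mib_per_s=150, produce_mib_per_s=50))
print(catch_up_seconds(30000, consume_mib_per_s=50, produce_mib_per_s=50))
```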

The following use case tests the Catch-Up Read performance of AutoMQ:

- The production-to-consumption traffic ratio is 1:3.
- Data will be written into 1280 partitions across 10 Topics.
- Each second, 800 messages of 64 KiB will be written without any batching, resulting in a write speed of 50 MiB/s.
- Before catch-up reads begin, 600 seconds' worth of data (approximately 30 GiB) will accumulate. Subsequently, 3 Consumer Groups will begin catch-up reads, with each Group starting 30 seconds apart (approximately 1.5 GiB).

Before running the following script, replace the --bootstrap-server address with your actual AutoMQ endpoint address.

    KAFKA_HEAP_OPTS="-Xmx12g -Xms12g" ./bin/automq-perf-test.sh \
      --bootstrap-server 0.kf-hsd29pri8q5myud5.wanshao-for-aws.automq.private:9092,1.kf-hsd29pri8q5myud5.wanshao-for-aws.automq.private:9092,2.kf-hsd29pri8q5myud5.wanshao-for-aws.automq.private:9092 \
      --producer-configs batch.size=0 \
      --consumer-configs fetch.max.wait.ms=1000 \
      --topics 10 \
      --partitions-per-topic 128 \
      --producers-per-topic 1 \
      --groups-per-topic 3 \
      --consumers-per-group 1 \
      --record-size 65536 \
      --send-rate 800 \
      --backlog-duration 600 \
      --group-start-delay 30 \
      --warmup-duration 5 \
      --reset

Execution Results

From the result output information, we can observe that AutoMQ's write performance remains entirely unaffected during cold reads.

Below are some issues commonly encountered during performance testing, along with their solutions.

A common issue during testing is that results fall short of expectations, for example a send rate lower than configured or high send latency. The root cause usually falls into one of two categories: excessive client-side pressure or excessive server-side pressure. Below are some common troubleshooting and resolution methods.

Among different cloud providers, various instances have different network bandwidth limits, which are divided into baseline (the minimum guaranteed bandwidth) and burst (the maximum achievable bandwidth) types.

If you attempt to run a high-throughput load on a machine with relatively limited network bandwidth, production or consumption rates will fall below expectations. For example, on a client machine with 128 MiB/s of network bandwidth, if you try to run a load of 50 MiB/s write + 150 MiB/s read, the read throughput will not exceed 128 MiB/s.

When selecting client models, it is recommended to choose based on testing load requirements, ensuring that the network baseline performance meets the demand to avoid the aforementioned issues.
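The example above can be expressed as a small check to run before choosing a client instance. This is a sketch under a stated assumption: the bandwidth limit is applied independently to each direction, which is how the example reads (only the 150 MiB/s read side is capped). The helper name is hypothetical.

```python
def effective_throughput(write_mib_per_s, read_mib_per_s, bandwidth_mib_per_s):
    """Cap the requested client write (egress) and read (ingress) rates at the NIC limit.

    Assumption: the limit applies separately to each direction.
    """
    return (
        min(write_mib_per_s, bandwidth_mib_per_s),
        min(read_mib_per_s, bandwidth_mib_per_s),
    )


# The example from the text: 50 MiB/s write + 150 MiB/s read on a 128 MiB/s client.
print(effective_throughput(50, 150, 128))
```

When sizing the client, use the instance's baseline bandwidth rather than its burst bandwidth as the limit, since only the baseline is guaranteed for the whole test.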

The `automq-perf-test.sh` script uses ZGC as the default JVM Garbage Collector. Compared to the traditional G1GC, ZGC consumes more CPU but has a much shorter Stop-the-World (STW) time. However, when the CPU is exhausted or the heap memory usage is too high, ZGC can degrade (Allocation Stall), resulting in a significant increase in STW time, which in turn leads to higher client latency.

You can check the ZGC logs to determine if there is an issue:

grep "Garbage Collection (Allocation Stall)" ./logs/kafkaClient-gc.log

If relevant logs are present, it indicates that the GC pressure on the client side is too high. You can try to resolve the issue by using the following methods:

- Increase the number of CPUs
- Increase the JVM heap size

When the Client's CPU utilization is excessively high, it may result in increased latency for Producers and Consumers on the Client side, thereby causing an overall increase in delay. Generally, to avoid system-wide delays caused by high CPU usage, it is recommended to keep the Client CPU utilization below 70% during Benchmark testing.

If you are testing a cluster for the first time, an empirical estimate is that the number of CPUs on the Client side should be equal to or slightly less than the number of CPUs on the Server side, which is likely to meet the testing requirements. If the Server side's capacity is measured by AKU, then the required number of Client-side CPUs is approximately AKU * 0.8.

When the CPU load on the Client side is too high, apart from scaling up, you can also consider reducing the load to lessen the Client-side pressure, such as:

- Reduce the number of Producers and Consumers.
- Reduce the number of Partitions per Topic. (In the automq-perf-test.sh script, each Producer sends messages to all Partitions of the Topic. It is recommended that --partitions-per-topic does not exceed 128 to avoid high pressure on a single Producer.)
- Increase --record-size and decrease --send-rate.

When the read and write traffic on the Server side is too high, it may exceed the machine's network bandwidth limit, leading to network throttling and a decline in read and write throughput.

In this case, you can:

- Reduce the throughput.
- Enable Producer compression by setting the `compression.type` configuration. Note that enabling compression can increase CPU load on both the client and server.

High server-side request rates (Produce + Fetch) can lead to increased CPU utilization, resulting in higher request latency.

In this case, you can reduce the request frequency by increasing the level of batching on the client side. Specifically:

- For the Producer, you can increase `batch.size` and `linger.ms` to enlarge the size of the Record Batches in each Produce request, thereby reducing the frequency of Produce requests.
- For the Consumer, you can increase `fetch.max.wait.ms` to extend the wait time on the Broker side for Fetch requests, thereby reducing the frequency of Fetch requests.

The Produce request frequency can be estimated as follows:

    BatchSize (bytes per Record Batch) = min(
        "batch.size",
        "--record-size" × "--send-rate" ÷ "--topics" ÷ "--producers-per-topic" ÷ (1000 ÷ "linger.ms") ÷ "--partitions-per-topic"
    )

    Total Produce request frequency of the cluster = "--record-size" × "--send-rate" ÷ BatchSize

The second argument of min is the number of bytes a single Producer accumulates for one partition within one `linger.ms` window, so both arguments are in bytes.
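The estimate above can be sketched in code. This is an illustration, not the tool's own logic, and it makes two labeled assumptions: the per-window figure is multiplied by the record size so that both arguments of min are in bytes, and a batch is taken to hold at least one record, which also covers `batch.size=0` and `linger.ms=0`. The function name is hypothetical.

```python
def produce_request_rate(record_size, send_rate, topics, producers_per_topic,
                         partitions_per_topic, batch_size, linger_ms):
    """Estimate cluster-wide Produce requests per second from the workload options."""
    producers = topics * producers_per_topic
    if linger_ms > 0:
        # Bytes one Producer accumulates for one partition in one linger.ms window.
        accumulated = (record_size * send_rate / producers
                       * linger_ms / 1000 / partitions_per_topic)
    else:
        accumulated = 0
    batch_bytes = min(batch_size, accumulated)
    # Assumption: every batch carries at least one record.
    batch_bytes = max(batch_bytes, record_size)
    return record_size * send_rate / batch_bytes


# Batching disabled: one request per message.
print(produce_request_rate(52224, 1600, 10, 1, 128, batch_size=0, linger_ms=0))
# Hypothetical batched setup: 1 MiB batches, 100 ms linger, one partition per topic.
print(produce_request_rate(52224, 1600, 10, 1, 1, batch_size=1048576, linger_ms=100))
```

With batching disabled, the estimate equals the message rate, which is the figure to compare against the recommended request frequency of the cluster.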

When a large number of Producers with higher send latency are used in testing, a significant number of messages may accumulate in the Producer's send buffer, which can lead to JVM memory exhaustion and OutOfMemoryError (OOM).

You can prevent this by limiting the memory used by the Producer:

- Reduce the number of Producers
- Lower the buffer.memory setting of the Producer configurations
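To judge how far to reduce either value, the worst-case send-buffer footprint can be compared against the JVM heap. The sketch below assumes each Producer may fill its entire `buffer.memory` (32 MiB by default in the Kafka Producer). The helper names and the 50% heap budget are hypothetical choices for illustration.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB
DEFAULT_BUFFER_MEMORY = 32 * MIB  # Kafka Producer default for buffer.memory


def producer_buffer_bytes(topics, producers_per_topic,
                          buffer_memory=DEFAULT_BUFFER_MEMORY):
    """Worst-case memory held by all Producer send buffers in the test process."""
    return topics * producers_per_topic * buffer_memory


def fits_in_heap(total_buffer_bytes, heap_bytes, max_fraction=0.5):
    """True when the buffers stay within a chosen fraction of the heap (hypothetical budget)."""
    return total_buffer_bytes <= heap_bytes * max_fraction


# 10 Producers with default buffers against a 12 GiB heap.
small = producer_buffer_bytes(topics=10, producers_per_topic=1)
print(small / MIB, fits_in_heap(small, 12 * GIB))

# 500 Producers with default buffers against the same heap.
large = producer_buffer_bytes(topics=100, producers_per_topic=5)
print(large / MIB, fits_in_heap(large, 12 * GIB))
```

A lower `buffer.memory` can be passed through `--producer-configs`, in the same way `batch.size` is set in the test commands.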

When there is a large number of Brokers and Partitions in the cluster, and traffic is fluctuating, you may sometimes observe intermittent jitter in higher percentiles of Produce Latency (e.g., P99) at intervals of one minute or several minutes—where latency spikes briefly, lasting for 1 to 3 seconds.

This is caused by AutoMQ's AutoBalancer executing automatic Partition reassignments. AutoMQ regularly checks the load on each Broker in the cluster, and when some Brokers have significantly higher loads, it attempts to reassign some Partitions to other Brokers to achieve a more balanced load. During a Partition reassignment, the affected Partitions are briefly unavailable (on the order of seconds), which causes jitter in Producer sending latency.

This article primarily guides users on how to use the automq-perf-test.sh tool to perform performance testing on a Kafka cluster. With this tool, users can construct different workloads to verify AutoMQ's performance under various hot read and cold read scenarios.

