Wide & Deep Learning on Shifu

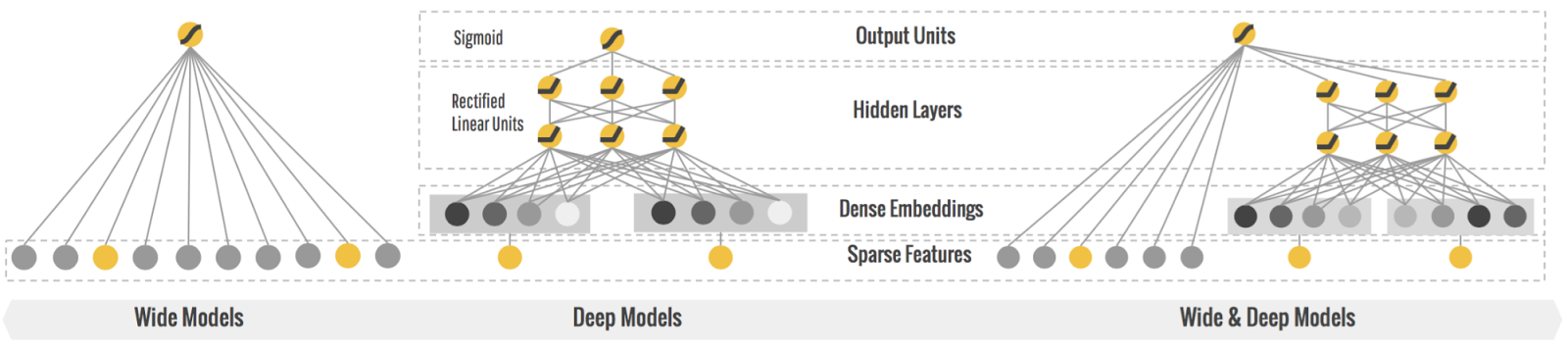

Deep learning is widely used in machine learning for its strong generalization ability. For memorization, however, it may not be as efficient as wide models. So can we combine wide and deep models? That idea led to the wide and deep model. For a detailed introduction to Wide & Deep learning models, see this Google blog.

We have implemented the wide & deep learning model (WDL) in Shifu based on the following considerations:

- Let users run a wide and deep learning model in Shifu with limited changes to the config file.

- Give users an option for large numbers of sparse features and category features. The implementation supports both sparse inputs and embed inputs.

- The wide and deep learning model is the first step for this category of models. Building on it, we will bring more models into Shifu, such as wide and GBDT models.

In our latest test, the WDL model reached an accuracy of 81.2%. Compared with the WDL model in TensorFlow, the Shifu version is faster on large amounts of training data. From our statistics, one iteration usually takes 5~7 seconds on a 100GB training data set.

Running WDL in Shifu is easy. The only change from Shifu NN is in ModelConfig.json: set "train"->"algorithm" to "WDL" and specify "NumEmbedColumnIds".

Compared with Shifu native NN, more parameters can be tuned in the train#params part if 'WDL' is selected in train#algorithm:

```json
"train" : {
  "baggingNum" : 1,
  "baggingWithReplacement" : true,
  "baggingSampleRate" : 1.0,
  "validSetRate" : 0.2,
  "numTrainEpochs" : 200,
  "isContinuous" : true,
  "algorithm" : "WDL",
  "params" : {
    "NumHiddenLayers" : 1,
    "ActivationFunc" : [ "tanh" ],
    "NumHiddenNodes" : [ 50 ],
    "LearningRate" : 0.002,
    "MiniBatchs" : 128,
    "NumEmbedColumnIds": [2,3,5,6,7,9,10,11,12,13,14,16,17,20,21,22,23,24,25,32,35,37,40,124,125,128,129,238,266,296,298,300,319,384,390,391,402,403,406,407,408,409,410,411,412,413,414,415,416,417,418,420,421,441,547,549,550,552,553,554,555,627,629],
    "L2Reg": 0,
    "Loss": "squared"
  }
},
```

- train::baggingNum: bagging of TensorFlow is not supported yet (work in progress), so there is no need to set baggingNum, baggingWithReplacement, or baggingSampleRate for now;

- train::numTrainEpochs: the number of training epochs; this is currently the stop hook inside TensorFlow training;

- train::isContinuous: whether to train continuously; setting it to true helps with occasional failures, because a restarted job resumes from the point of failure;

- train::params::LearningRate: unlike native Shifu NN, the recommended learning rate is much smaller, such as 0.002;

- train::params::MiniBatchs: mini-batch size, 128 by default;

- train::params::RegularizedConstant: L2 regularization value in TensorFlow training, 0.01 by default;

- train::params::NumEmbedColumnIds: a list of embed column ids chosen from the category features;

- train::params::Propagation: optimizer used in TensorFlow training; the default 'Adam' is AdamOptimizer, and 'B' (GradientDescentOptimizer) or 'AdaGrad' (AdagradOptimizer) can also be specified.
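The switch from NN to WDL described above can be sketched as a small script. This is only an illustration: the helper name `enable_wdl` and the starting `nn_config` dict are hypothetical, and only the keys shown in the config above are used. In practice you would edit ModelConfig.json directly.

```python
import copy
import json


def enable_wdl(model_config, embed_column_ids, learning_rate=0.002, mini_batchs=128):
    """Return a copy of a ModelConfig-style dict with train#algorithm set to WDL.

    Hypothetical helper for illustration; it is not part of Shifu.
    """
    config = copy.deepcopy(model_config)  # leave the caller's dict untouched
    train = config.setdefault("train", {})
    train["algorithm"] = "WDL"
    params = train.setdefault("params", {})
    params["NumEmbedColumnIds"] = sorted(embed_column_ids)
    params["LearningRate"] = learning_rate
    params["MiniBatchs"] = mini_batchs
    return config


# Hypothetical starting point: the train section of a Shifu NN config.
nn_config = {
    "train": {
        "algorithm": "NN",
        "numTrainEpochs": 200,
        "params": {"NumHiddenLayers": 1, "NumHiddenNodes": [50]},
    }
}

wdl_config = enable_wdl(nn_config, [5, 2, 3])
print(json.dumps(wdl_config["train"], indent=2))
```

The defaults mirror the values mentioned above (a learning rate of 0.002 and a mini-batch size of 128).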

For a more detailed step-by-step guide to training a Wide and Deep model in Shifu, see the tutorial Build Your First WDL Model.

Like other models in Shifu, we have leveraged the Guagua framework for the model training.

Based on the Guagua framework, to support the Wide & Deep learning algorithm in the distributed system, we have built the whole training system with one WDL master and multiple WDL workers.

WDL Master Functions:

- Initialize a new WDL model based on the config.

- Retrieve gradients from all workers and aggregate them.

- Update the weights in the WDL model and send them out to all workers.

WDL Worker Functions:

- Initialize and load one part of the training data into memory.

- Retrieve the latest WDL model and run batch training on the in-memory training set.

- Compute gradients and send them to the master.
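The master and worker roles above can be sketched in a single process. This is a simplified illustration, not the Guagua API: the `Worker` and `Master` classes, the logistic-regression stand-in for the WDL model, and the toy data are all assumptions made for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class Worker:
    """Holds one shard of the training data in memory (hypothetical class)."""

    def __init__(self, shard):
        self.shard = shard  # list of (features, label) pairs

    def compute_gradients(self, weights):
        # Batch gradient of the log loss over this worker's shard.
        grads = [0.0] * len(weights)
        for features, label in self.shard:
            pred = sigmoid(sum(w * x for w, x in zip(weights, features)))
            for i, x in enumerate(features):
                grads[i] += (pred - label) * x
        return grads, len(self.shard)


class Master:
    """Owns the model weights and applies aggregated gradients (hypothetical class)."""

    def __init__(self, num_weights, learning_rate):
        self.weights = [0.0] * num_weights
        self.learning_rate = learning_rate

    def aggregate_and_update(self, worker_results):
        total = sum(count for _, count in worker_results)
        for i in range(len(self.weights)):
            grad = sum(grads[i] for grads, _ in worker_results) / total
            self.weights[i] -= self.learning_rate * grad
        return list(self.weights)  # copy sent back to the workers


def log_loss(weights, records):
    total = 0.0
    for features, label in records:
        pred = sigmoid(sum(w * x for w, x in zip(weights, features)))
        total -= label * math.log(pred) + (1 - label) * math.log(1.0 - pred)
    return total / len(records)


def train(shards, num_weights, epochs, learning_rate):
    master = Master(num_weights, learning_rate)
    workers = [Worker(shard) for shard in shards]
    weights = list(master.weights)
    for _ in range(epochs):
        results = [worker.compute_gradients(weights) for worker in workers]
        weights = master.aggregate_and_update(results)
    return weights


# Toy data split across two workers: features are [bias, x], label is 1 when x > 0.
shards = [
    [([1.0, 2.0], 1), ([1.0, -1.0], 0)],
    [([1.0, 1.0], 1), ([1.0, -2.0], 0)],
]
weights = train(shards, num_weights=2, epochs=50, learning_rate=0.5)
print(weights)
```

Because the master sums the per-worker gradients before updating, training on two shards gives the same weights as training on the combined data with a single worker.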

The structure of the WDL model is shown below:

At the top level, the Wide & Deep model combines a wide layer and a deep layer. We combine the outputs of these two layers with a linear regression function and generate the final output with a sigmoid function (configurable).

- Wide Layer

The wide layer consists of multiple wide field layers and one wide dense layer. Each wide field layer stands for one category feature. Instead of using one-hot encoding, we support sparse input as the wide field layer input. This saves both memory and compute resources. The wide dense layer is an LR model with all numerical features as input.

- Dense Layer

The dense layer is a deep neural network with multiple hidden layers. It supports both numerical features and embed category features as input.

In machine learning, one-hot encoding is commonly used for category features, but it has two disadvantages.

- A category with many distinct values may have more than a hundred inputs, only one of which is non-zero, so storing all of these inputs wastes memory.

- Updating these zero fields also wastes computing resources. In the image below, all grey line updates are wasted computation because the input values are zero.

In the case above, we need to store 28 bytes (7 floats) for a single input and perform 7 * 4 float multiplications for the update.

So we designed a more memory-efficient and compute-efficient input representation called Sparse Input.

SparseInput Class

Based on this structure, we can reduce memory storage from 28 bytes to 8 bytes (2 ints). The computation also drops from 28 multiplications to 4.
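The saving can be illustrated with a small comparison between a one-hot input and a sparse input. This is a sketch only: the field names `column_id` and `value_index` are assumptions about what the two ints hold, and the 7-value category with 4 output nodes mirrors the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SparseInput:
    """Two ints instead of a full one-hot vector (field names are assumed)."""
    column_id: int    # which category column this input belongs to
    value_index: int  # index of the single active category value


def one_hot_forward(one_hot, weights):
    """Dense path: every input is multiplied with every output weight."""
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    multiplications = 0
    for i, x in enumerate(one_hot):
        for j in range(n_out):
            outputs[j] += x * weights[i][j]
            multiplications += 1
    return outputs, multiplications


def sparse_forward(sparse, weights, value=1.0):
    """Sparse path: only the weight row of the active category is touched."""
    row = weights[sparse.value_index]
    outputs = [value * w for w in row]
    return outputs, len(row)


# 7 category values feeding 4 output nodes; the weights are arbitrary.
weights = [[0.1 * (i + 1) + 0.01 * j for j in range(4)] for i in range(7)]
one_hot = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
sparse = SparseInput(column_id=0, value_index=2)

dense_out, dense_mults = one_hot_forward(one_hot, weights)
sparse_out, sparse_mults = sparse_forward(sparse, weights)
print(dense_mults, sparse_mults)
```

Both paths produce the same outputs; the sparse path simply skips the rows whose input is zero.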

Besides full batch training, we also support mini-batch training in the WDL training process. This can be enabled with a single configuration change.

```json
"train" : {
  "baggingNum" : 1,
  "baggingWithReplacement" : false,
  "baggingSampleRate" : 1.0,
  "validSetRate" : 0.1,
  "numTrainEpochs" : 1000,
  "isContinuous" : true,
  "workerThreadCount" : 4,
  "algorithm" : "WDL",
  "params" : {
    "wideEnable" : true,
    "deepEnable" : true,
    "embedEnable" : true,
    "Propagation" : "B",
    "LearningRate": 0.5,
    "MiniBatchs": 4000,
    "NumHiddenLayers": 1,
    "NumEmbedColumnIds": [2,3,5,6,7,9,10,11,12,13,14,16,17,20,21,22,23,24,25,32,35,37,40,124,125,128,129,238,266,296,298,300,319,384,390,391,402,403,406,407,408,409,410,411,412,413,414,415,416,417,418,420,421,441,547,549,550,552,553,554,555,627,629],
    "ActivationFunc": ["tanh"],
    "NumHiddenNodes": [30],
    "WDLL2Reg": 0.01
  }
},
```

"MiniBatchs": configures how many records go into one mini-batch during the training process.
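As a rough illustration of what the setting controls, the sketch below splits in-memory records into consecutive mini-batches. The helper `mini_batches` is hypothetical, and how Shifu actually forms its batches (for example, whether records are shuffled first) is not described here.

```python
def mini_batches(records, batch_size):
    """Yield consecutive slices of at most batch_size records (hypothetical helper)."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


# With "MiniBatchs": 4000, 10000 in-memory records form three mini-batches.
records = list(range(10000))
batch_sizes = [len(batch) for batch in mini_batches(records, 4000)]
print(batch_sizes)
```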

We are continuing to tune and improve the model's accuracy and performance. In the latest test, we achieved an 81.2% catch rate and an AUC of 0.9529413011874155.